Meta AI has introduced ImageBind, a new open-source AI model that learns a single embedding space from six distinct data types: images, text, audio, depth, thermal, and IMU (inertial measurement unit) data. It is designed for a range of tasks, including cross-modal retrieval, composing modalities through embedding arithmetic, cross-modal detection, and generation. This multimodal model is poised to have a substantial impact across many domains and AI tools.

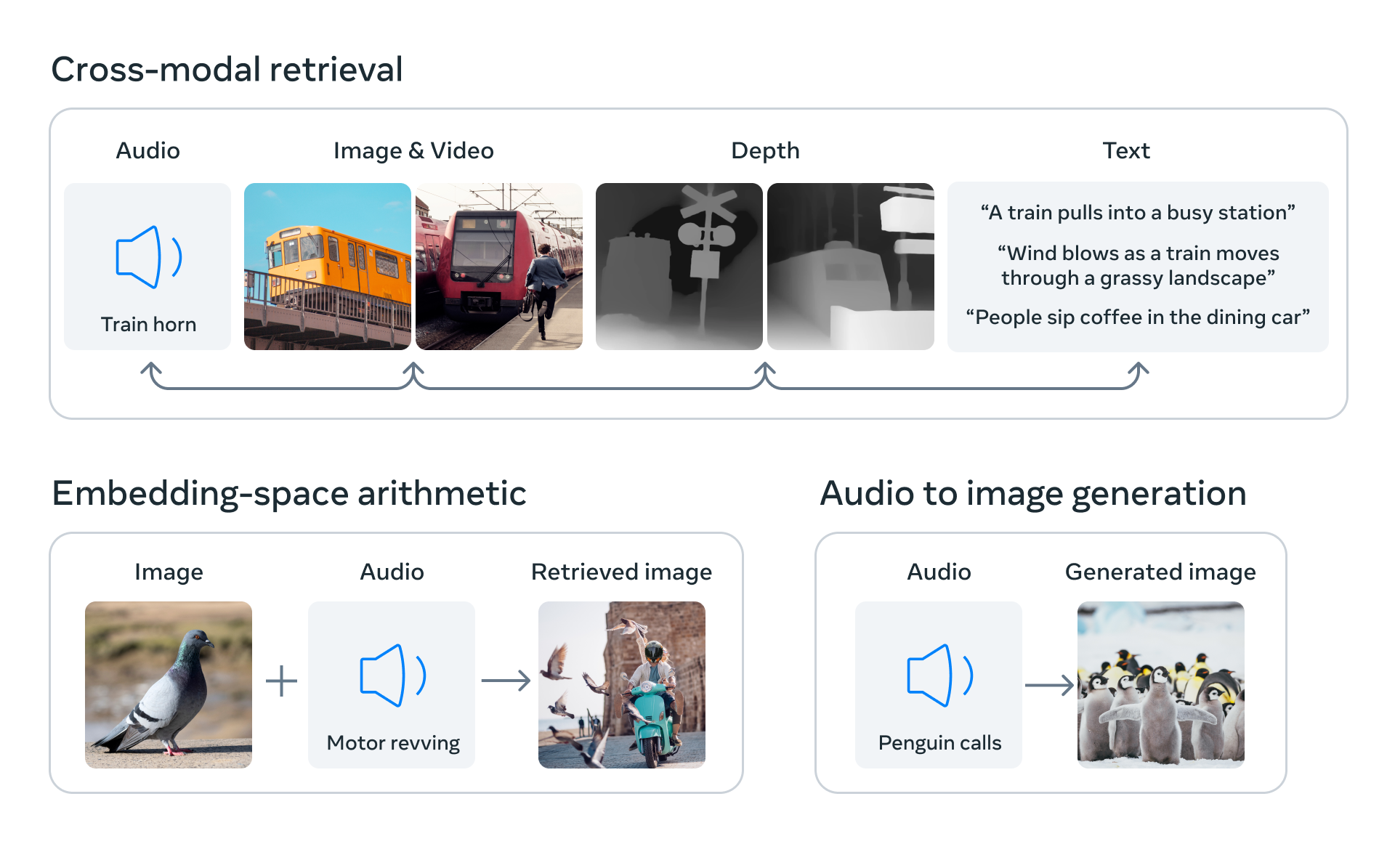

ImageBind establishes a method for binding embeddings from different modalities into a single shared space. Introduced in a 2023 research paper, the method trains this joint embedding space using only image-paired data. Each modality is aligned with images, so no dataset needs to contain all six modalities at once. Because every modality is bound to images, modalities that are rarely or never observed together, such as audio, depth, and text, also become aligned with one another. This emergent alignment is what makes cross-modal retrieval between them possible.
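The image-alignment training can be sketched as a contrastive (InfoNCE) objective. The ImageBind paper describes aligning each modality's embeddings with image embeddings using an InfoNCE loss, applied in both directions. The sketch below is a minimal pure-Python illustration under stated assumptions. The toy three-dimensional vectors stand in for real encoder outputs. The temperature of 0.07 is borrowed from CLIP's initial value and may not match ImageBind's training setup. The helper names (`info_nce`, `symmetric_loss`) are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def l2_normalise(v: Vector) -> Vector:
    """Scale to unit length so that dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def info_nce(queries: List[Vector], keys: List[Vector], tau: float) -> float:
    """Mean InfoNCE loss: query i must pick key i out of all keys in the batch."""
    q = [l2_normalise(v) for v in queries]
    k = [l2_normalise(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        logits = [sum(a * b for a, b in zip(qi, kj)) / tau for kj in k]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denominator - logits[i]  # equals -log(softmax(logits)[i])
    return total / len(q)


def symmetric_loss(image_emb: List[Vector], other_emb: List[Vector], tau: float = 0.07) -> float:
    """Align another modality with images in both directions: L(I,M) + L(M,I)."""
    return info_nce(image_emb, other_emb, tau) + info_nce(other_emb, image_emb, tau)


# Toy batch of three image/audio pairs (hypothetical values, not model outputs).
images = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
audio_paired = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
audio_shuffled = [audio_paired[1], audio_paired[2], audio_paired[0]]

print(symmetric_loss(images, audio_paired) < symmetric_loss(images, audio_shuffled))  # True
```

Correctly paired embeddings produce a lower loss than shuffled ones. Minimising this loss pulls each audio embedding towards its own image and away from the other images in the batch. Nothing in the objective requires audio to be paired with text, which is why alignment between those two modalities has to emerge through the shared image space.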

Additionally, ImageBind enables audio-to-image generation. Its audio embeddings are fed to a pre-trained DALL-E 2 decoder that was designed to work with CLIP text embeddings. This works because ImageBind's image and text encoders come from a frozen, pretrained CLIP model (OpenCLIP), so its audio embeddings land in a space the decoder can already interpret. The generated image is expected to be semantically related to the input audio clip. ImageBind has potential applications in many industries that rely on multimodal data, including healthcare, entertainment, education, robotics, automotive, e-commerce, and gaming.

Ten Intriguing Facts about ImageBind

- Common space for different modalities: ImageBind aligns different modalities' embeddings into a shared space, simplifying work with multiple data types.

- Cross-modal retrieval: Modalities such as audio, depth, or text become aligned even though they are never observed together during training. As a result, one modality can be used to retrieve another.

- Composing semantics: Adding embeddings from different modalities composes their semantics. For example, an image embedding plus an audio embedding can retrieve images that match both.

- Audio-to-image generation: ImageBind enables audio-to-image generation by feeding its audio embeddings to a pre-trained DALL-E 2 decoder designed to work with CLIP text embeddings.

- Image alignment training: ImageBind trains its joint embedding space using only image-paired data, so it does not need datasets in which all modalities occur together.

- Emergent alignment measurement: The method leads to emergent alignment across all modalities, measurable using cross-modal retrieval and text-based zero-shot tasks.

- Compositional multimodal tasks: ImageBind enables a rich set of compositional multimodal tasks across different modalities, opening up new possibilities in AI applications.

- Evaluating and upgrading pretrained models: ImageBind provides a way to evaluate pretrained vision models on non-vision tasks and to 'upgrade' models such as DALL-E 2 to use audio.

- Room for improvement: There are multiple ways to further improve ImageBind, such as enriching the image alignment loss by using other alignment data or other modalities paired with text or with each other (e.g., audio with IMU).

- Well-received research: The paper introducing ImageBind was published in 2023 and presented at CVPR 2023. It has drawn considerable attention in the research community for its approach to multimodal learning and its potential applications in fields such as computer vision and natural language processing.
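Once every modality shares one space, cross-modal retrieval and embedding arithmetic reduce to simple vector operations. The sketch below assumes the embeddings have already been produced by some encoder. Here they are hand-made toy vectors, not real ImageBind outputs. The file names and the helper functions (`retrieve`, `compose`) are hypothetical. Retrieval ranks a gallery by cosine similarity to the query. Composition adds normalised embeddings and then searches with the sum.

```python
import math
from typing import Dict, List

Vector = List[float]


def normalise(v: Vector) -> Vector:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two embeddings."""
    return sum(x * y for x, y in zip(normalise(a), normalise(b)))


def retrieve(query: Vector, gallery: Dict[str, Vector], top_k: int = 1) -> List[str]:
    """Return the top_k gallery keys ranked by cosine similarity to the query."""
    ranked = sorted(gallery, key=lambda name: cosine(query, gallery[name]), reverse=True)
    return ranked[:top_k]


def compose(*embeddings: Vector) -> Vector:
    """Embedding arithmetic: add the normalised embeddings, then renormalise the sum."""
    summed = [sum(parts) for parts in zip(*(normalise(e) for e in embeddings))]
    return normalise(summed)


# Hypothetical toy embeddings; dimensions loosely mean (bird, water, traffic).
images = {
    "bird_in_forest.jpg": [1.0, 0.0, 0.1],
    "bird_on_beach.jpg": [0.7, 0.7, 0.0],
    "city_street.jpg": [0.0, 0.1, 1.0],
}
birdsong_audio = [0.9, 0.1, 0.0]
waves_audio = [0.0, 1.0, 0.0]

print(retrieve(birdsong_audio, images))  # ['bird_in_forest.jpg']
print(retrieve(compose(birdsong_audio, waves_audio), images))  # ['bird_on_beach.jpg']
```

Text-based zero-shot classification follows the same pattern. Replace the image gallery with a gallery of text-prompt embeddings and take the closest prompt as the predicted label.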

Meta ImageBind: holistic AI learning across six modalities (Image: Meta)

Expanding Horizons: ImageBind's Applications in Various Industries

Beyond its potential to transform virtual reality experiences, search engines, and computer interfaces, ImageBind has applications in many industries that work with multimodal data:

- Healthcare: IMU-based text search enabled by ImageBind could make it easier to find relevant motion recordings in healthcare and activity-monitoring data.

- Entertainment: Cross-modal retrieval enabled by ImageBind can enhance user experiences by retrieving relevant images, audio clips, or text descriptions for movies, TV shows, or music.

- Education: ImageBind can make learning more engaging and efficient by creating educational materials that combine different modalities such as images, audio, and text.

- Robotics: By aligning the embeddings of a robot's different sensor streams in one space, ImageBind could support better perception and decision-making in robotic applications.

- Automotive: IMU-based text search enabled by ImageBind could support activity search and safety monitoring in vehicles, with potential benefits for vehicle performance and passenger well-being.

- E-commerce: Cross-modal retrieval enabled by ImageBind can enhance the overall shopping experience for customers by retrieving relevant product images, audio descriptions, or reviews for online shopping.

- Gaming: ImageBind could help create more immersive gaming experiences that combine different modalities such as images, audio, and text.

These examples show just a few of ImageBind's potential applications. Because ImageBind is a relatively new method for multimodal learning, many more use cases may remain to be explored.

The Future of ImageBind and Its Applications

ImageBind is still a research model, but it has considerable potential for applications such as virtual reality experiences, search engines, and computer interfaces. Imagine using ImageBind to create more realistic and immersive virtual reality environments, to improve search accuracy by retrieving images from text descriptions or relevant text for a given image, and to develop new ways of controlling computers through gestures and natural language.

The possibilities are wide-ranging, and as ImageBind evolves we can expect further developments in AI and multimodal learning. Meta AI's commitment to open-source research and knowledge sharing should accelerate progress in this area and open the way for many new applications. ImageBind is worth watching: it could significantly change how we interact with computers and the world around us.